Make it Simple

The Primary Imperative

It is my belief that the majority of software engineers possess a drive to craft excellent software (perhaps that is a tad optimistic, but I'm an optimist to the end). There is a bundle of qualities that make software excellent. Code that is easily read, easily tested, and easily deployed. We should be able to change one area of the code without breaking something else. We shouldn't have to read an enormous swath of the codebase to understand all the pieces duct-taped together and held in balance by that one line of code we need to change in order to fix a bug.

Even more than all of that, excellent software should be secure, durable, available, scalable, and cost-effective. It should provide value to current and future users. We should be able to reshape it with minimal cost and minimal delay as the needs of customers and the business evolve, rapidly, over time, again and again.

The canon of software engineering self-improvement literature -- published books, blog posts, podcasts -- is packed with a near-limitless supply of concrete ways to implement excellent software: rules, techniques, guidelines, patterns, architectures. However, I think there is a tendency to get caught up in these topics. Overly fixated. Tunnel visioned. Cargo-culted. People nitpick over the details or get bogged down in dogma. One example is the fanatical debate between those who argue for microservices, those who argue for modular monoliths, and those who argue for some mix of the two. Others debate object-oriented vs functional programming. Others dither over frameworks, languages, design patterns, testing practices, and so forth.

Anything that can be argued about by software engineers will be argued about no matter how fruitless the argument's outcome.

In my experience, the majority of software engineers want to craft excellent software, but often lose sight of the single most important quality that ensures sustained excellence.

Let's crack open a massive tome, Code Complete 2 by Steve McConnell [McConnell], and see what he has to say:

Managing complexity is the most important technical topic in software development. In my view, it's so important that Software's Primary Technical Imperative has to be managing complexity.

Ah, Software's Primary Technical Imperative. You can't get much more emphatic than that. But I'm going to try:

Simplicity -- or, a minimum of complexity -- is the most important thing to obsess about in the technical domain of software engineering. Simplicity is the quality we should affix to the top of our consciousness each time we attempt to solve a problem or place our fingers above a keyboard to tap out some code. Study it. Meditate on it. Make it a mantra.

Make it Simple.

Make it Simple

Make it Simple

Simple vs Easy

Simple.

Hmm, let's be clear about the definition of this word. Rich Hickey's excellent talk "Simple Made Easy" [Hickey] provides an apt breakdown of the meaning of simplicity and complexity.

The etymology of the terms provides a powerful visualization. "Simple" is derived from sim (one) and plex (fold) -- a single fold or braid. "Complex" is derived from the Latin complecti (to entwine around), which is based on "plectere" (to braid).

[Image Credit: Alberto Gasco, Unsplash]

Braided. Folded. Entwined. Good, visceral words that convey meaning with a powerful mental image. Complexity is the tight interweaving of concerns -- coupling -- while simplicity suggests a minimum of coupling. By these definitions, simple and complex become objective measures: you can count the number of coupling points in a software system. Whether something is simple or complex is not a matter of intuition or feeling -- it can be quantified.
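As a rough illustration of the claim that coupling can be counted, here is a minimal Python sketch that treats each dependency from one module to another as one coupling point. The module names and dependency maps are hypothetical, and counting edges in a dependency graph is only one simple proxy for coupling, not a complete measure of complexity.

```python
from typing import Dict, Set

# Hypothetical dependency map: each module lists the modules it depends on.
# Every edge (A depends on B) is counted as one coupling point.
DependencyGraph = Dict[str, Set[str]]


def coupling_points(graph: DependencyGraph) -> int:
    """Count the total number of dependency edges in the graph."""
    return sum(len(deps) for deps in graph.values())


def fan_in(graph: DependencyGraph, module: str) -> int:
    """Count how many other modules depend on `module`."""
    return sum(1 for name, deps in graph.items() if module in deps and name != module)


# A tangled design: nearly every module reaches into every other.
tangled: DependencyGraph = {
    "billing": {"orders", "users", "inventory"},
    "orders": {"billing", "users", "inventory"},
    "users": {"billing", "orders"},
    "inventory": {"orders", "billing"},
}

# A decomposed design: modules depend only on what they actually need.
decomposed: DependencyGraph = {
    "billing": {"orders"},
    "orders": {"inventory"},
    "users": set(),
    "inventory": set(),
}

print(coupling_points(tangled))     # 10
print(coupling_points(decomposed))  # 2
```

The exact number matters less than the trend: every edge is a place where a change in one module can ripple into another.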

Hickey contrasts simple with easy, which is derived from a root meaning "near at hand." Nearness is relative: what is close to one person at one position may be far from someone else at a different position. In software engineering, easy might refer to a concept or codebase one already understands, or a skill one has already studied and practiced. Easy is relative. What is effortless for the senior engineer who is intimately familiar with the codebase and problem domain may take days of strenuous effort for the new hire straight out of college.

Complexity proliferates because simple solutions are hard, and easy solutions tend to accumulate tech debt. Simple solutions are hard because they require the discipline to do more upfront design work and think critically about the problem; they require practiced skill to implement correctly; they require reading unfamiliar code; they require using unfamiliar tools; they require communicating with teammates or people from other teams; they may require learning new concepts or technologies. Software engineering itself is hard, and only getting harder with each passing year [Etheredge].

The pressure to deliver software under tight deadlines has enormous influence over the decision between simple and easy. This leads to a trap, to a snare of tech debt, baited by the idea that you can trade off software quality for faster delivery speed. But that is not the case.

Developers find poor quality code significantly slows them down within a few weeks. So there's not much runway where the trade-off between internal quality and cost applies. Even small software efforts benefit from attention to good software practices, certainly something I can attest from my experience. [Fowler]

One of my new favorite quotes compares the difference between mere programming (creating a unit of code in one moment in time) and the discipline of software engineering:

Software engineering is programming integrated over time [Winters, et al]

We need to consider the compounded effects of multiple changes accumulating cruft in the system over time. To build excellent software, we must balance the needs of the system today with the needs that will be present over its lifetime. To address those needs at minimal cost to the future engineers and maintainers of the system (including yourself), the system must be built to be simple, and it must be kept simple. As Hickey suggests, if we build our software to be simple, using techniques that promote simple design, then development in that software can become easy.

Cognitive Load

Why does simplicity matter so much?

The human brain is a powerful processor optimized for creative thinking, pattern matching, and intuitive leaps of thought: it forms unexplored connections between unrelated concepts and develops new models and modes of thinking about the real world.

However, the brain has some limitations. It suffers from a bottleneck similar to that of a modern CPU: the L1 cache. It's really, really, really small.

Our squishy brain's little cache is called working memory [Wikipedia - WM]. The mind can hold only about 4 to 7 discrete chunks of information at a time. Trying to cram in too many chunks at once is overwhelming. The brain must exert more effort handling "cache misses" by fetching from long-term memory and cycling the data in and out (again, very similar to the problems CPUs face in high-performance applications). This effort is called cognitive load [Wikipedia - CL].

I like to think of my own mental stamina as mana (as in, the "RPG" concept of a player's finite pool of magical power to use to cast spells). I begin each day with a finite pool of mana available to cast spells -- I mean, write code. If the codebase I am working in is clean and well-designed, I can devote my mana to more powerful activities -- like writing good code, designing an elegant solution, crafting good tests, untangling knots of business logic, identifying patterns, making creative leaps of thought, experiencing breakthroughs on a difficult design problem. Or I can devote my mana to an endurance run, and spend it on easier tasks for a longer day of coding.

If the codebase is complex and tightly coupled, I experience more cognitive load, so I have to spend more mana to do even simple tasks and ultimately accomplish less. The ceiling for the great things I could do in the codebase is lower because I don't have enough total mana to both handle the complexity and make a breakthrough. What's more, my mind becomes fatigued earlier in the day, and I may get sloppy and make mistakes. Even if the code review process or CI/CD testing catches those mistakes, the overall task is now delayed by the asynchronous, iterative rounds of this longer feedback loop.

(I still haven't found a ring or bathrobe that provides me with more max mana or mana regeneration, so I guess I have to find another way to maximize my limited mana pool.)

The CPU and mana analogies should accomplish two things: first, confirm I am a huge nerd; and second, illustrate my point that high cognitive load prevents software engineers from getting shit done. Next, I'll demonstrate that it sucks even more for the company employing those dutiful software developers.

Let's compare brains to CPUs again, but this time in the context of cost. An experienced engineer in Seattle or San Jose is going to run you around $150-200K per year. Factoring in benefits and expenses (the coffee and tea in the breakroom ain't exactly free), let's say $300K per year. Looking at an online chart of AWS EC2 instance prices, the most expensive cloud compute is some unnecessarily extravagant monster called a p3dn.24xlarge, which comes packed with 768 GB of memory and 96 vCPUs, and costs about $312,101 per year if, for some reason, you want to run it on demand with the Windows operating system. At first glance this beast would seem to be a little more expensive than an engineer, except this isn't a fair comparison: the average software engineer has only one brain (not 96 of them!). Per computational unit, a human is roughly 90x more expensive than one vCPU on one of the most astronomically ridiculous machines AWS will let you lease.

A more modest m5.4xlarge, with 64 GB of memory and 16 vCPUs, on a reserved plan and running Linux, will set you back about $265 per vCPU per year: roughly 1/1000th the cost of a developer.

| Resource | Annual Cost | Annual Cost per Computational Unit |
|---|---|---|
| p3dn.24xlarge, On Demand, Windows (96 vCPUs) | $312,101.28 | $3,251.06 |
| m5.4xlarge, Reserved, Linux (16 vCPUs) | $4,239.84 | $264.99 |
| Experienced Software Engineer (1 brain) | $300,000.00 | $300,000.00 |
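The arithmetic behind the table is easy to check by hand, but a short Python sketch makes the per-unit division and the ratios explicit. The prices are the figures quoted above (AWS pricing changes over time), and treating one vCPU and one brain as comparable "computational units" is the same tongue-in-cheek assumption the comparison already makes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    annual_cost: float  # USD per year, as quoted in the table above
    units: int          # vCPUs for a machine, brains for an engineer

    @property
    def cost_per_unit(self) -> float:
        return self.annual_cost / self.units


p3dn = Resource("p3dn.24xlarge, On Demand, Windows", 312_101.28, 96)
m5 = Resource("m5.4xlarge, Reserved, Linux", 4_239.84, 16)
engineer = Resource("Experienced Software Engineer", 300_000.00, 1)

for r in (p3dn, m5, engineer):
    print(f"{r.name}: ${r.cost_per_unit:,.2f} per unit")

# How many times more expensive one brain is than one vCPU.
print(round(engineer.cost_per_unit / p3dn.cost_per_unit))  # 92
print(round(engineer.cost_per_unit / m5.cost_per_unit))    # 1132
```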

This isn't even the most critical cost to consider. We must also factor in an expense that can dwarf these figures by several orders of magnitude: opportunity cost. What opportunities must a business delay or abandon because its software developers spend most of their mana dealing with the high cognitive load inflicted by rampant, unmanaged complexity?

This is why simplicity matters.

Decomposition

Simplicity is the quality we want, but we need some general framework for how to make software simple. Fortunately, this framework has been around for a while.

In 1971, David Parnas wrote "On the Criteria To Be Used in Decomposing Systems into Modules" [Parnas]. I cannot recommend this paper highly enough, so please read it, or at least the summary on The Morning Paper [Colyer] -- or better still, both. The paper contains a critical insight into a general heuristic for developing simple software:

- Get a strong understanding of the problem (this problem could be of any scope -- a new architecture, a new microservice, a new API, or even just a bug fix)

- Break the problem into independent sub-problems

- Encapsulate the sub-problems into modules that hide the details of the implementation

- Re-compose those modules to form a solution

- Repeat this process until you have reached an ideal composition

At first blush, this might seem excruciatingly obvious, something you have read in blogs and books many times over. Please, I encourage you to consider it with fresh eyes and an open mind. Behind the seemingly "obvious" are subtle truths that unlock a deeper appreciation and understanding of simplicity.

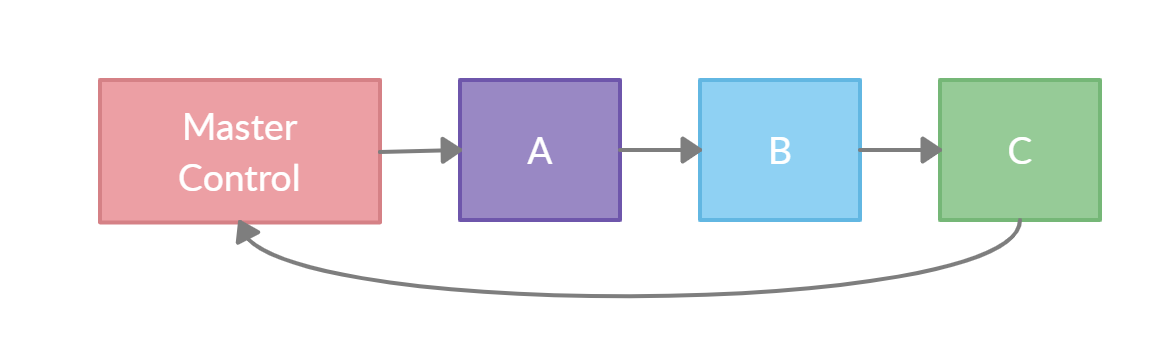

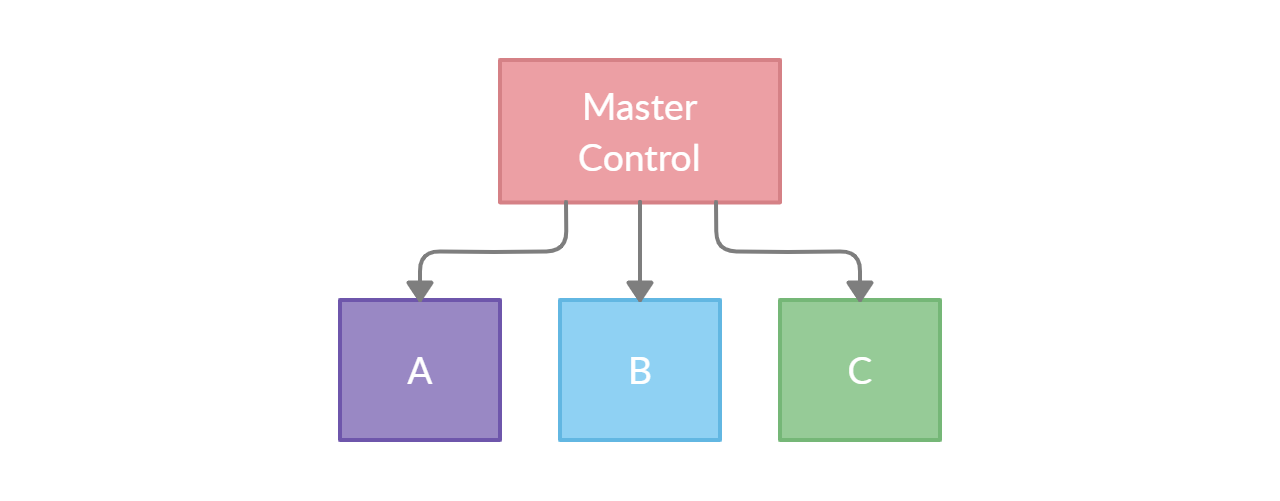

I'm going to summarize bits of Parnas's paper to help illustrate the point. The paper describes a software problem, and compares two solutions, with two different decompositions. The first uses a procedural step-by-step approach, using an orchestrator to chain together modules that solve one bit of the problem. Each module knows the specific details of the input and output data defined by this specific problem, and depends on the data provided by the module before it. Parnas calls this a "flowchart" approach.
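Parnas's example problem is a KWIC (keyword in context) index: take a list of lines, produce every circular shift of each line, and list the shifts in alphabetical order. The Python sketch below is a loose, modernized rendering of the first, "flowchart" decomposition, not Parnas's original code, and the function names are hypothetical. Notice that every step knows the exact shape of the data produced by the step before it: a list of word lists, then a list of (line index, word offset) pairs.

```python
from typing import List, Tuple

# Shared data formats that every module must know about.
Lines = List[List[str]]  # each line is a list of words
Shift = Tuple[int, int]  # (line index, index of the shift's first word)


def read_input(text: str) -> Lines:
    """Module 1: parse raw text into the shared Lines format."""
    return [line.split() for line in text.splitlines() if line.strip()]


def circular_shift(lines: Lines) -> List[Shift]:
    """Module 2: depends on the Lines format produced by read_input."""
    return [(i, j) for i, words in enumerate(lines) for j in range(len(words))]


def alphabetize(lines: Lines, shifts: List[Shift]) -> List[Shift]:
    """Module 3: depends on BOTH the Lines format and the Shift format."""
    def key(shift: Shift) -> List[str]:
        i, j = shift
        words = lines[i]
        return [w.lower() for w in words[j:] + words[:j]]

    return sorted(shifts, key=key)


def output(lines: Lines, shifts: List[Shift]) -> List[str]:
    """Module 4: also reaches directly into both shared formats."""
    return [" ".join(lines[i][j:] + lines[i][:j]) for i, j in shifts]


def main(text: str) -> List[str]:
    """The orchestrator chains each step's output into the next step."""
    lines = read_input(text)
    shifts = circular_shift(lines)
    return output(lines, alphabetize(lines, shifts))


print(main("make it simple\nsimple made easy"))
# ['easy simple made', 'it simple make', 'made easy simple',
#  'make it simple', 'simple made easy', 'simple make it']
```

If you decide to change how lines are stored (say, as one packed string), every one of these functions has to change, because they all depend on the `Lines` format.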

The second solution also uses an orchestrator to put together modules, and these modules also solve one little bit of the problem. The critical difference is that instead of being built to solve chunks of the problem at hand, they are designed to solve independent, generically defined subproblems. The solution to each generic subproblem is exposed through an interface, which acts as a simplified guide to solving that subproblem. The module hides the details of the implementation, which provides two advantages: first, any code that uses the module does not entangle itself with those complex implementation details; second, the engineer using the module does not need to hold those implementation details in their head, because they only need to think about the interface.

Finally, instead of chaining the modules together so that they must be aware of each other and depend on their position in the chain, the "Master Control" handles passing data between them and ensures they run in the order they are arranged. If the module interfaces are simple and self-describing, then the "Master Control" almost becomes a thin, easy-to-read description of the overall solution. If the first solution is a flowchart, then the second is more of a directed acyclic graph.
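For contrast, here is a Python sketch in the spirit of the second decomposition. The module roles (Line Storage, Circular Shifter, Alphabetizer, Master Control) follow Parnas's paper, but the class and method names are hypothetical and the code is a modern approximation, not a transcription. Each class hides one decision behind a small interface, and `master_control` reads as a plain description of the solution.

```python
from typing import List


class LineStorage:
    """Hides how lines are stored. Callers see only lines and words."""

    def __init__(self) -> None:
        self._lines: List[List[str]] = []  # hidden representation

    def add_line(self, words: List[str]) -> None:
        self._lines.append(list(words))

    def line_count(self) -> int:
        return len(self._lines)

    def word_count(self, line: int) -> int:
        return len(self._lines[line])

    def word(self, line: int, index: int) -> str:
        return self._lines[line][index]


class CircularShifter:
    """Hides how shifts are represented; exposes the words of shift N."""

    def __init__(self, storage: LineStorage) -> None:
        self._storage = storage
        self._shifts = [
            (i, j)
            for i in range(storage.line_count())
            for j in range(storage.word_count(i))
        ]

    def shift_count(self) -> int:
        return len(self._shifts)

    def words(self, shift: int) -> List[str]:
        line, start = self._shifts[shift]
        n = self._storage.word_count(line)
        return [self._storage.word(line, (start + k) % n) for k in range(n)]


class Alphabetizer:
    """Hides the sorting strategy; exposes 'the i-th shift in order'."""

    def __init__(self, shifter: CircularShifter) -> None:
        self._shifter = shifter
        self._order = sorted(
            range(shifter.shift_count()),
            key=lambda s: [w.lower() for w in shifter.words(s)],
        )

    def __len__(self) -> int:
        return len(self._order)

    def ith(self, i: int) -> List[str]:
        return self._shifter.words(self._order[i])


def read_input(text: str, storage: LineStorage) -> None:
    """Input module: knows the input format, writes only via LineStorage."""
    for raw in text.splitlines():
        if raw.strip():
            storage.add_line(raw.split())


def master_control(text: str) -> List[str]:
    """A thin description of the solution: read, shift, sort, output."""
    storage = LineStorage()
    read_input(text, storage)
    alphabetized = Alphabetizer(CircularShifter(storage))
    return [" ".join(alphabetized.ith(i)) for i in range(len(alphabetized))]


print(master_control("make it simple\nsimple made easy"))
# ['easy simple made', 'it simple make', 'made easy simple',
#  'make it simple', 'simple made easy', 'simple make it']
```

Because only `LineStorage` touches the representation, changing how lines are stored would mean rewriting that one class while its four methods keep the same behavior.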

Both solutions will get the job done, and both are equally viable -- until you apply changes over time. Parnas demonstrates six different requirements introduced to the system. In the first decomposition, each feature requires changes in at least two modules (and sometimes all of them!). In the second, only a single module needs to change.

The paper concludes with the summation:

We propose instead that one begins with a list of difficult design decisions or design decisions which are likely to change. Each module is then designed to hide such a decision from the others. Since, in most cases, design decisions transcend time of execution, modules will not correspond to steps in the processing. To achieve an efficient implementation we must abandon the assumption that a module is one or more subroutines, and instead allow subroutines and programs to be assembled collections of code from various modules.

This is another critical point, because it tells us where interfaces and modules are going to add value:

- Difficult design decisions (i.e., solutions or business logic with a high degree of essential complexity, the complexity that is inherent to the problem's nature)

- Code that is likely to change together: if code A changes whenever code B changes, then A and B have a high degree of cohesion, and they belong in the same module. Our goal should be to decompose our problems, identify the sub-problems with high cohesion, group them together, and design interfaces and modules around them.
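To make "hide a decision that is likely to change" concrete, here is a small hypothetical Python sketch (not taken from Parnas's paper) in which the storage format of text lines is the hidden decision. Two interchangeable classes satisfy the same small interface, modeled here with `typing.Protocol`, and the consumer never changes when the storage does. Inside `PackedLines`, the packed string and the offset table always change together, so by the cohesion argument above they live in the same module. All class and function names are illustrative assumptions.

```python
from typing import List, Protocol


class Lines(Protocol):
    """The interface: the only thing consumers may depend on."""

    def count(self) -> int: ...

    def get(self, index: int) -> str: ...


class ListLines:
    """Decision A: keep each line as a separate string in a list."""

    def __init__(self, lines: List[str]) -> None:
        self._lines = list(lines)

    def count(self) -> int:
        return len(self._lines)

    def get(self, index: int) -> str:
        return self._lines[index]


class PackedLines:
    """Decision B: pack all lines into one string plus an offset table."""

    def __init__(self, lines: List[str]) -> None:
        self._data = "".join(lines)
        self._offsets = [0]
        for line in lines:
            self._offsets.append(self._offsets[-1] + len(line))

    def count(self) -> int:
        return len(self._offsets) - 1

    def get(self, index: int) -> str:
        return self._data[self._offsets[index]:self._offsets[index + 1]]


def longest_line(lines: Lines) -> str:
    """A consumer that depends only on the interface, never the representation."""
    return max((lines.get(i) for i in range(lines.count())), key=len)


sample = ["make it", "simple", "made easy"]
print(longest_line(ListLines(sample)))    # made easy
print(longest_line(PackedLines(sample)))  # made easy
```

Swapping one storage class for the other is a one-module change; `longest_line` and any other consumers are untouched.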

Now I want to be very clear about my message here. Modules or abstractions are great. Design patterns and techniques that use these things are wonderful. But they are subservient to the main point: nothing is more critical to the effort of managing complexity than the proper decomposition of problems.

It’s not simply about having lots of small modules, a large part of the success or otherwise of your system depends on how you choose to divide the system into modules in the first place. [Colyer]

Decomposition is king. Everything else pays homage to this point.

Modules

Humans have an easier time comprehending several simple pieces of information than one complicated piece. The goal of all software-design techniques is to break a complicated problem into simple pieces. The more independent the subsystems are, the more you make it safe to focus on one bit of complexity at a time. [McConnell]

Assuming you have optimally decomposed your problem, now you may employ the elements of modular design to create these simple pieces, and then assemble those pieces into a solution. In many respects, I believe ideal software development should almost feel like building stuff with Legos, where you are both builder and Lego-piece maker.

[Image Credit: Nathan Dumalo, Unsplash]

The tenets of modular design are simple and general:

- Use a simple, spare interface to hide the details of a single difficult design decision or a portion of the design that is highly likely to change on its own

- Develop the interface around an abstract idea that describes the general solution, so engineers don't need to think too hard or know the underlying details

McConnell has this to say on that last point:

An effective module provides an additional level of abstraction -- once you write it, you can take it for granted. It reduces overall program complexity and allows you to focus on one thing at a time. [McConnell]
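Here is one small, hypothetical illustration of both tenets in Python. The module's entire public interface is one function built around an abstract idea ("N business days after a date"), and it hides a difficult, change-prone decision: which days count as holidays. The holiday set and the function names are placeholder assumptions, not a real calendar.

```python
from datetime import date, timedelta
from typing import FrozenSet

# Hidden design decision: which days count as holidays. This set is a
# hypothetical placeholder; a real system might load a regional calendar.
_HOLIDAYS: FrozenSet[date] = frozenset({date(2024, 12, 25), date(2025, 1, 1)})


def _is_business_day(day: date) -> bool:
    # Monday is 0 and Sunday is 6, so weekday() < 5 means Monday to Friday.
    return day.weekday() < 5 and day not in _HOLIDAYS


def add_business_days(start: date, days: int) -> date:
    """The public interface: the date `days` business days after `start`.

    Callers never see weekends, holiday tables, or the stepping loop.
    """
    if days < 0:
        raise ValueError("days must be non-negative")
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if _is_business_day(current):
            remaining -= 1
    return current


# Friday 2024-12-20 plus 3 business days skips the weekend and Christmas.
print(add_business_days(date(2024, 12, 20), 3))  # 2024-12-26
```

Once written, callers can take "business days" for granted; if the holiday rules change, only the hidden parts of this module do.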

You can implement modular design at any level and scope of the tech stack: classes and functions, packages and libraries, services and applications, even single lines of code. You can write modules in the frontend or the backend. You can use any language. You can use an object-oriented style or functional style. You can use a monolith or set of microservices.

Finally, I will argue that modular interfaces are far more powerful if they are written in a self-describing language based on the problem domain. It makes them more readable and understandable. Your code becomes an expression of the domain itself. In this regard, I'd like to call out Domain-Driven Design as a good framework for decomposition and modular design.
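A minimal Python sketch of what an interface in the language of the domain can look like. The order statuses, the 30-day refund window, and the names `Order` and `can_be_refunded` are all hypothetical; the point is that callers ask a question the business would recognize instead of decoding status codes and timestamps themselves.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class OrderStatus(Enum):
    PLACED = "placed"
    SHIPPED = "shipped"
    DELIVERED = "delivered"
    CANCELLED = "cancelled"


# Hypothetical business rule: delivered orders are refundable for 30 days.
REFUND_WINDOW = timedelta(days=30)


@dataclass
class Order:
    status: OrderStatus
    delivered_at: Optional[datetime] = None

    def can_be_refunded(self, now: datetime) -> bool:
        """Domain language: reads like the business rule it implements."""
        return (
            self.status is OrderStatus.DELIVERED
            and self.delivered_at is not None
            and now - self.delivered_at <= REFUND_WINDOW
        )


# Without domain language, the same rule might leak into every caller as
# something like: if o[3] == 2 and (now_ts - o[7]) < 2592000: ...
order = Order(OrderStatus.DELIVERED, delivered_at=datetime(2024, 1, 1))
print(order.can_be_refunded(now=datetime(2024, 1, 15)))  # True
print(order.can_be_refunded(now=datetime(2024, 3, 1)))   # False
```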

Conclusion

To write simple software, understand the problem domain, decompose it down to a set of sub-problems, encapsulate them in independent modules, and then compose up the full solution out of those pieces.

Let me be clear that I am not trying to sell this as a silver bullet. Those don't exist. Silver bullets are intended to be fast and easy, whereas what I propose is hard. It takes discipline, both for yourself, and for the team you build software with.

Speaking of silver bullets, it is fun to take a moment and revisit the imagery behind Frederick Brooks's popular analogy [Brooks]: you have a mild-mannered software project exposed to the luminous light of unmanaged complexity, and it gradually transforms into a snarling werewolf rampaging through the countryside. The quickest way to slay the beast would be to shoot it with a silver bullet. What could be easier? All you need to do is load silver slugs into your rifle, take aim, and fire from a safe distance. No need to get your hands dirty. Alas, as Brooks writes, it's only a fantasy. Complexity is inherent to our technology, our problem domains, humanity, and the physical world itself. We will never be able to eliminate it.

But we can manage it.

So I'll go as far as to assert that decomposition and modular design are silver-plated daggers. To slay the werewolves, we must get up close and personal, face-to-face with complexity, and we must struggle and fight. It won't always be easy. But maybe, we can make it simple.

References

[Brooks] Frederick P. Brooks, "No Silver Bullet - Essence and Accident in Software Engineering" - http://faculty.salisbury.edu/~xswang/Research/Papers/SERelated/no-silver-bullet.pdf

[McConnell] Steve McConnell, "Code Complete 2" - https://www.amazon.com/Code-Complete-Practical-Handbook-Construction/dp/0735619670/ref=sr_1_2?dchild=1&keywords=code+complete+2&qid=1603837652&sr=8-2

[Hickey] Rich Hickey, "Simple Made Easy" - https://www.infoq.com/presentations/Simple-Made-Easy/

[Etheredge] Justin Etheredge, "Why does it take so long to build software?" - https://www.simplethread.com/why-does-it-take-so-long-to-build-software/

[Fowler] Martin Fowler, "Is High Quality Software Worth the Cost?" - https://martinfowler.com/articles/is-quality-worth-cost.html

[Winters, et al] Titus Winters, Tom Manshreck, Hyrum Wright, "Software Engineering at Google: Lessons Learned from Programming Over Time"

[Wikipedia - WM] Wikipedia, "Working Memory" - https://en.wikipedia.org/wiki/Working_memory (Note that this is a theoretical concept, but one that is surely proven out in the daily experience of most software engineers)

[Wikipedia - CL] Wikipedia, "Cognitive Load" - https://en.wikipedia.org/wiki/Cognitive_load

[Parnas] David L. Parnas, "On the Criteria To Be Used in Decomposing Systems into Modules" - https://www.win.tue.nl/~wstomv/edu/2ip30/references/criteria_for_modularization.pdf

[Colyer] Adrian Colyer, "On the criteria to be used in decomposing systems into modules" - https://blog.acolyer.org/2016/09/05/on-the-criteria-to-be-used-in-decomposing-systems-into-modules/